Mentally Preparing Your Organization for Evaluation Part 1 (of 5)

Four Big Blockers That Can Derail Evaluation Attempts

In my 20+ years in the field, I’ve seen countless nonprofit (and government!) organizations fail to achieve any kind of traction with evaluating their work (including wasting money hiring outside evaluators who don’t end up producing usable data). Don’t get me wrong – these organizations are doing great work. And they likely are great at telling the story of their work through anecdotal evidence, heart-tugging emotional narrative, and success examples. But just as likely, they are not producing any statistical evidence to back up the narrative. They are not producing hard data that their theory of change or program design or program implementation is effecting actual change – data that is absolutely needed to attract major funders and long-term investments. And, in my experience, there is ONE THING that leads to this failure:

An overestimation of the evaluation-specific knowledge needed and an underestimation of the capacity-building / organizational-development / systems-thinking knowledge needed to implement outcome evaluation.

Almost always, when I start working with an organization and I insist they build evaluation capacity into the bones of their organization by training program managers and department heads on evaluation, I get the same pushback: but these staff don’t know anything about evaluation, they don’t have time to learn / become researchers, and/or they aren’t interested in learning about “math” and “data” (terms often said with a bit of a sneer).

And sure, yes, at the higher levels, evaluation work requires specialized knowledge of research methodologies and data analysis techniques – things like statistical significance, correlation, bias reduction in instrument design, and experimental research design (control groups and the like). But there is no need to jump to that right out of the gate. There is a LOT of evaluation that can be done with a layperson’s understanding of a few basic statistical concepts (mean or average, median or middle value, mode or most frequent value, and percent change), knowledge of Excel or Google Sheets charting functionality, and a layperson’s understanding of evaluation, such as outputs versus outcomes and the various types of evaluation (knowledge I’ve provided in my last two months’ worth of posts).
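To show how little math this really involves, here is a minimal Python sketch of those four calculations using only the standard library. The scores are made-up sample data for a hypothetical 1-to-5 satisfaction question, and `percent_change` is a helper name I've chosen for illustration. The same calculations can be done with built-in spreadsheet functions.

```python
from statistics import mean, median, mode

# Hypothetical responses to one 1-5 satisfaction question (illustrative data only)
scores = [4, 5, 3, 4, 5, 4, 2, 4, 5, 4]

average = mean(scores)        # mean / average
middle = median(scores)       # median (middle value once the scores are sorted)
most_common = mode(scores)    # mode (most frequent value)


def percent_change(old, new):
    """Percent change from an earlier value to a later one."""
    return (new - old) / old * 100


# Hypothetical average scores from two consecutive cohorts
change = percent_change(3.6, 4.0)

print("mean:", average)
print("median:", middle)
print("mode:", most_common)
print("percent change:", round(change, 1))
```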

On the other hand, there is almost NO evaluation that can be done if evaluation, even in its simplest form (such as a customer satisfaction survey), is not approached as an organizational capacity-building initiative. Too often, organizations think “All we’re doing is giving out a short customer-satisfaction survey at the end of every workshop / class / program cohort. That’s easy. It requires almost no additional capacity, right? The workshop / program facilitator / teacher will just need to hand out the paper form at the end of the class / program and collect the completed forms.”

Ah… but then someone needs to read / review the evaluations to see what was said. How are they going to do that? Will they visually review each and every form, or does the data need to be collated first? And who will do that? And what tool will be used to collate the data?

And then maybe someone should calculate the average score given to each question. Who will do that? And are they doing it by hand / calculator or using some kind of reporting / analysis tool? If they are typing the responses into a spreadsheet, how long will that take per form / per cohort of forms? If you have a 5-question form and 25 participants, that is 125 entries into a spreadsheet, each of which needs to be entered and then double-checked for accuracy. That’s not a negligible amount of time. That’s probably at least an hour of work. How frequently will this happen? Does the program run weekly, monthly, quarterly? So it’s an extra hour of work per class / cohort every week, month, or quarter that someone on staff has to absorb. If you have a 10-question form and 100 participants, now we’re up to 1,000 entries into a spreadsheet. That’s going to be 2-4 hours of work every cohort.
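To make that arithmetic concrete, here is a minimal Python sketch that counts the spreadsheet entries per cohort and scales my rough one-hour-per-cohort estimate across a year. The function names and the runs-per-year figures are assumptions for illustration; swap in hours you have actually timed for your own forms.

```python
def entries_per_cohort(questions, participants):
    """Number of spreadsheet cells to key in for one cohort of paper forms."""
    return questions * participants


def annual_hours(hours_per_cohort, cohorts_per_year):
    """Total data-entry hours per year at a given per-cohort estimate."""
    return hours_per_cohort * cohorts_per_year


# Assumed program schedules (illustrative): how many cohorts run per year
RUNS_PER_YEAR = {"weekly": 52, "monthly": 12, "quarterly": 4}

small_form = entries_per_cohort(questions=5, participants=25)
large_form = entries_per_cohort(questions=10, participants=100)
print("5 questions x 25 participants:", small_form, "entries")
print("10 questions x 100 participants:", large_form, "entries")

# Using the rough estimate of one hour per cohort for the small form
for schedule, runs in RUNS_PER_YEAR.items():
    print(schedule, "program:", annual_hours(1, runs), "hours of data entry per year")
```

Even at one hour per cohort, a weekly program adds up to more than a full work week of data entry every year, before anyone has analyzed or shared anything.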

And then the scores should probably be looked at over time – are they getting better or worse? Who is going to do those calculations and make those charts / graphs?
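As a sketch of what "looking at scores over time" can mean in practice, the Python below averages one question's scores for each cohort and labels whether each cohort did better or worse than the one before it. The cohort names and scores are invented for illustration, and comparing only consecutive cohorts is a simplifying assumption, not a recommended method.

```python
from statistics import mean

# Hypothetical scores for one survey question, grouped by cohort (illustrative only)
cohort_scores = {
    "January": [3, 4, 3, 4, 3],
    "February": [4, 4, 3, 5, 4],
    "March": [4, 5, 4, 5, 4],
}

# Average score per cohort, kept in the order the cohorts ran
averages = {cohort: mean(scores) for cohort, scores in cohort_scores.items()}

# Compare each cohort's average with the previous cohort's average
trend = {}
previous = None
for cohort, avg in averages.items():
    if previous is None:
        trend[cohort] = "baseline"
    elif avg > previous:
        trend[cohort] = "better"
    elif avg < previous:
        trend[cohort] = "worse"
    else:
        trend[cohort] = "no change"
    previous = avg

for cohort in averages:
    print(cohort, averages[cohort], trend[cohort])
```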

And then, after the data is entered and the data crunched, the results need to be shared. Does the person crunching the data need to send an email informing other staff (program manager and/or department head and/or executive director and/or board) of the results? Does the data cruncher need to create a report of the results – charts/graphs, narrative explanation / context, et cetera? How long will it take to send that email and/or prepare that report? Do the results need to be discussed at a meeting – is the meeting a standing one already scheduled or does a meeting need to be scheduled for the discussion? Does the person presenting the results need to prepare for the meeting – print handouts, prepare remarks? How much time will the discussion take on the meeting agenda? Is the data discussion pushing other things off the agenda or increasing the amount of time the meeting needs to take to cover all the other topics since the data discussion has taken up some of the meeting time?

Is there follow up that will need to happen after the discussion – changes to the workshop / program and/or planning meetings to figure out how to improve an underperforming program / planning meetings to figure out how to apply the program’s strengths to other programs?

As you can see, even just one simple, five-to-ten question customer satisfaction survey can add up to a considerable amount of extra work daily/weekly/monthly/quarterly on a number of staff throughout the organization, and all too often, organizations don’t think through the time-consuming ripples that this new work will create (and therefore don’t adequately resource the work), especially when they only look at the “input” to the system (the five-question survey) and not the entire evaluative process.

It’s this failure to think through the additional workload (and ensure capacity at every stage) of the evaluative process as data moves through the evaluation ecosystem that most often dooms evaluation initiatives to failure. And the resourcing of the work is just one facet of the impact of evaluation work on the organization – there are, as with any organizational development or systems change work, many others.

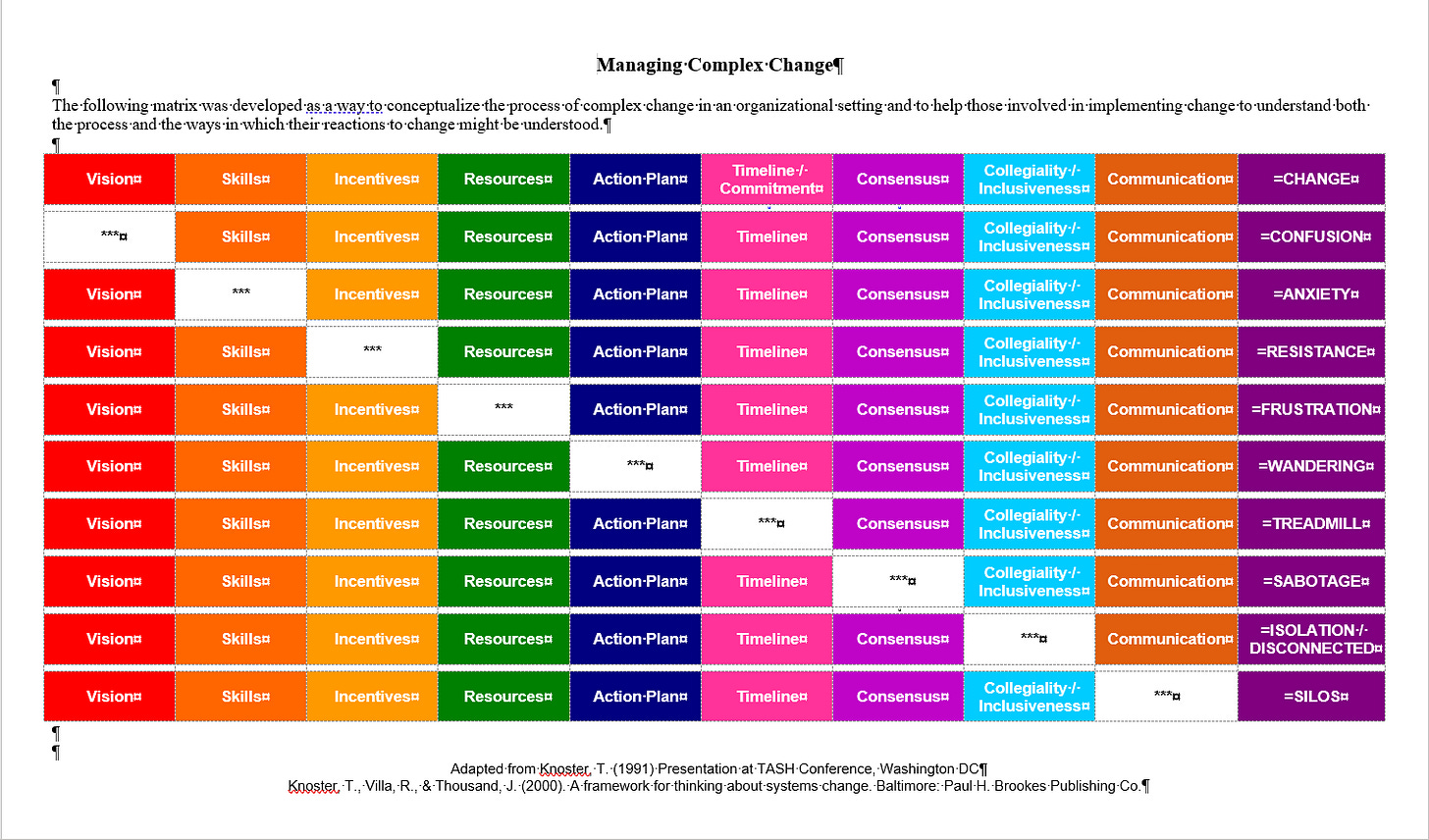

See, for example, the Lippitt – Villa – Thousand – Knoster “Managing Complex Change Chart” (pictured above) for other facets of change management that need to be addressed / stewarded when implementing an evaluation schema. The version pictured is one I created by synthesizing several different versions of the Lippitt et al. change management charts I’ve come across over the years with my own knowledge of and experience with change management. We’ll do a deeper dive on this chart in Week 2 of this month.

For the rest of this month, each week, we’ll take a look at the four most common organizational development-related evaluation blockers – how they present / block evaluation work and how to address them (ideally, BEFORE you start implementing evaluation) through a weekly 10-minute vlog and some hands-on exercises and discussion questions.

Week of July 4th: Fear of Losing “The Magic”

Week of July 11th: Lippitt et al. Change Management Chart

Week of July 18th: Importance of Consistency

Week of July 25th: “Magic Fairy Dust” Fallacy

Week of July 31st: Bonus Content!

We kick it off with this week’s supplemental vlog, in which I share what the collaborative approach to horse ownership in St. Croix can teach us about balancing evaluative rigor with the “secret sauce” that makes an organization’s programs work. Click below (or click here to view on YouTube) to watch this week’s video and then check out the exercises and discussion questions below!

Exercises / Discussion Questions:

Has your organization’s staff or volunteers ever expressed fears that adding evaluation, standard operating procedures and/or a procedural manual, and/or routinization will stifle or destroy the “magic” of your organization (its “vibe,” energy, agility, etc.)? How did you address those fears?

Certainly, while routinization, standardization, evaluation / assessment, and/or Standard Operating Procedures (SOPs) can make an organization less chaotic and more effective, they can also make an organization less agile, less flexible, more hierarchical, and/or even less creative / less willing to take risks. What metric(s) / measurement tool(s) could you use to know if “the magic” / organizational effectiveness is, indeed, being reduced by the addition of evaluation, SOPs, standardization, etc.? What would be the “sweet spot” between chaotic and moribund for your organization? How could you measure whether or not your organization is staying in that effectively balanced zone?

Terri,

I've written to you before because your newsletter strikes such a chord in me. Some thoughts:

-Having the discussion early in the process to get people excited about evaluation as a means to provide better services to people. At our Charter Schools we had teachers who used test results to build a better curriculum or cover areas that needed improvement.

-In the "top gun" school of aerial combat they had to show that "flying by instinct" was not as good as evaluating actual performance. There is no such thing as losing the "magic"; there is only the ability to improve. Beyond the "magic" there is the fear of change.

-Getting people to see that change can happen in small bites so if people see small successes and positive reward they will be willing to take on larger challenges.

Keep up the good work. Eric